Reducing hallucinations in LLM agents with a verified semantic cache using Amazon Bedrock Knowledge Bases

AWS Machine Learning

FEBRUARY 21, 2025

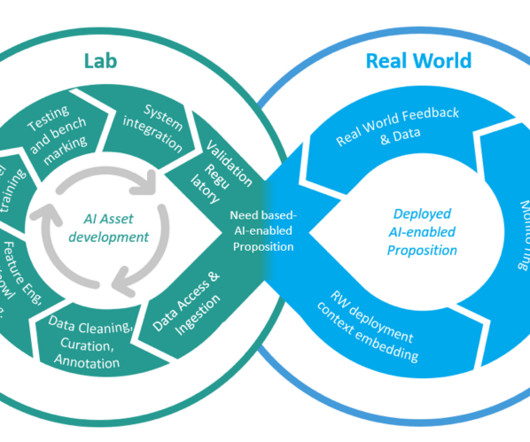

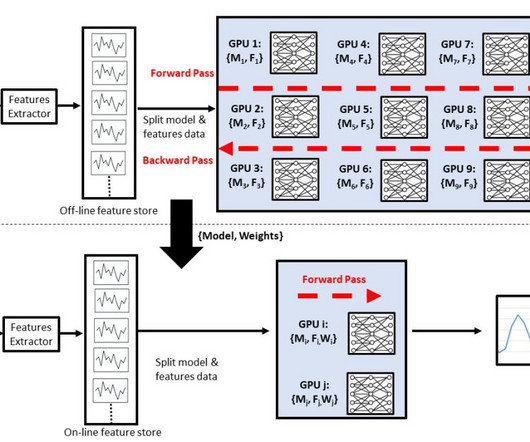

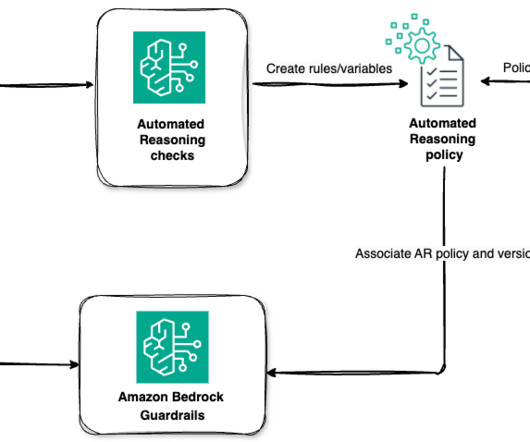

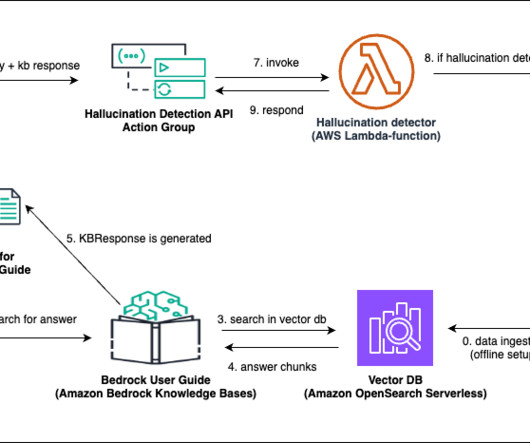

Solution overview

Our solution implements a verified semantic cache using the Amazon Bedrock Knowledge Bases Retrieve API to reduce hallucinations in LLM responses while also improving latency and reducing costs. Let's assume that the question "What date will AWS re:Invent 2024 occur?" is within the verified semantic cache.
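To make the cache lookup concrete, here is a minimal Python sketch of how an agent might check the verified semantic cache before calling the LLM. It is an illustration under stated assumptions, not the post's actual implementation. The `retrieve` call on the boto3 `bedrock-agent-runtime` client and the `retrievalResults`, `score`, and `metadata` response fields are part of the Retrieve API. The 0.8 similarity threshold, the `answer` metadata key holding the verified answer, and the function names `lookup_verified_answer` and `make_bedrock_retriever` are hypothetical choices made for this example.

```python
from typing import Any, Callable, Dict, Optional

# Assumed cutoff for treating a cached question as a match; the right
# value depends on the embedding model and the data, so tune it.
SIMILARITY_THRESHOLD = 0.8


def lookup_verified_answer(
    question: str,
    retrieve: Callable[[str], Dict[str, Any]],
    threshold: float = SIMILARITY_THRESHOLD,
) -> Optional[str]:
    """Return a verified answer if the closest cached question is similar enough.

    `retrieve` takes the user question and returns a response shaped like
    the Bedrock Knowledge Bases Retrieve API output. Returns None on a
    cache miss, in which case the caller would fall back to the LLM.
    """
    response = retrieve(question)
    results = response.get("retrievalResults", [])
    if not results:
        return None

    # Pick the highest-scoring cached entry.
    best = max(results, key=lambda r: r.get("score") or 0.0)
    if (best.get("score") or 0.0) < threshold:
        return None

    # Assumption: each cached entry stores its verified answer under a
    # hypothetical "answer" metadata key.
    return best.get("metadata", {}).get("answer")


def make_bedrock_retriever(
    knowledge_base_id: str, top_k: int = 1
) -> Callable[[str], Dict[str, Any]]:
    """Build a `retrieve` callable backed by the Retrieve API."""
    import boto3  # imported lazily so the lookup logic can be tested offline

    client = boto3.client("bedrock-agent-runtime")

    def retrieve(question: str) -> Dict[str, Any]:
        return client.retrieve(
            knowledgeBaseId=knowledge_base_id,
            retrievalQuery={"text": question},
            retrievalConfiguration={
                "vectorSearchConfiguration": {"numberOfResults": top_k}
            },
        )

    return retrieve
```

With a knowledge base ID supplied by the reader, `lookup_verified_answer("When is re:Invent 2024?", make_bedrock_retriever(kb_id))` would return the cached answer when a sufficiently similar verified question exists, and `None` otherwise. Passing the retriever in as a callable keeps the matching logic independent of AWS credentials, which makes it easy to test with a stub.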
