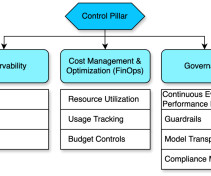

Governing ML lifecycle at scale: Best practices to set up cost and usage visibility of ML workloads in multi-account environments

AWS Machine Learning

NOVEMBER 14, 2024

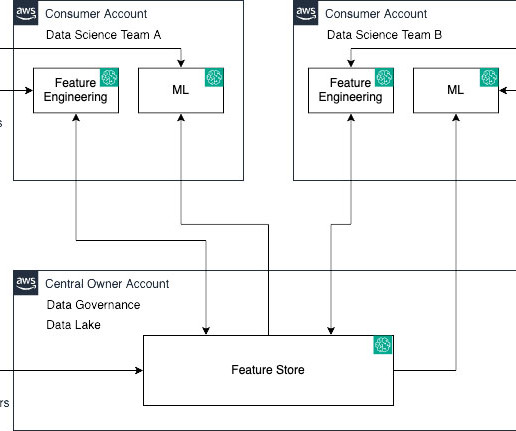

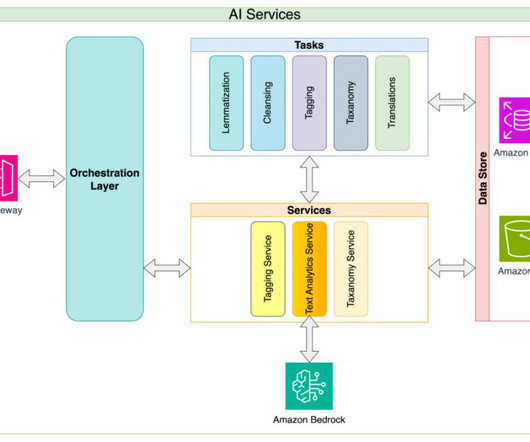

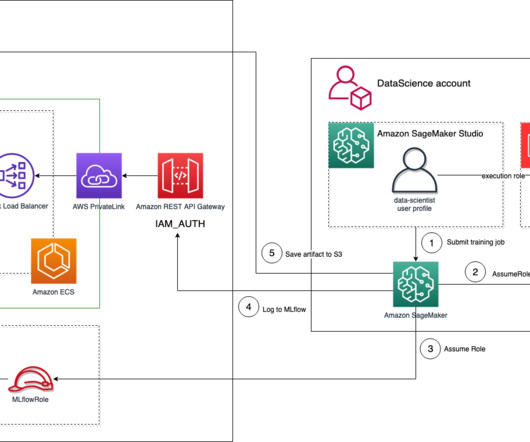

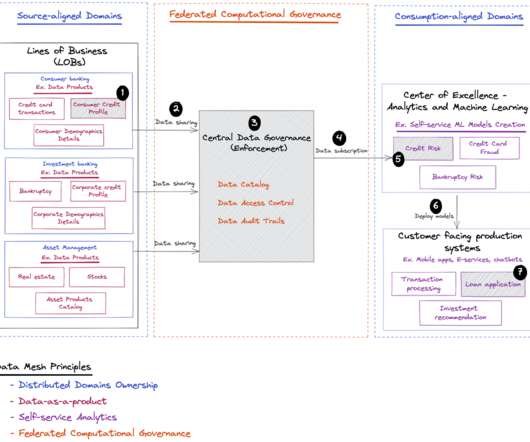

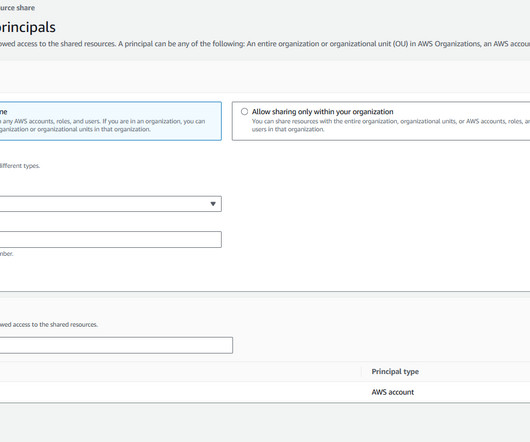

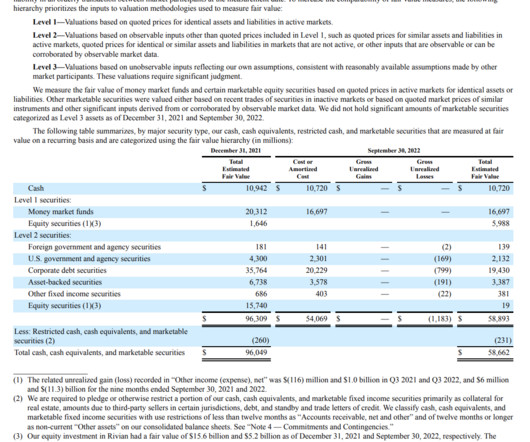

In a multi-account environment, you can track costs at the AWS account level to attribute expenses to whichever team or workload owns each account. Combining account-level tracking with tags gives the best results, because tags let you break costs down further within an account. Tagging is also an effective scaling mechanism for implementing cloud management and governance strategies.
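As a rough illustration of combining the account and tag views, the sketch below builds a request for the AWS Cost Explorer `get_cost_and_usage` API grouped by linked account and by a tag, then totals the response per (account, tag value) pair. The tag key `project`, the helper names `build_cost_query` and `summarize_costs`, and the sample figures are assumptions made for this example, not something the original post prescribes.

```python
from collections import defaultdict
from decimal import Decimal


def build_cost_query(start, end, tag_key):
    """Build keyword arguments for Cost Explorer's get_cost_and_usage.

    start/end are ISO dates (YYYY-MM-DD); tag_key is a hypothetical
    cost allocation tag such as "project".
    """
    return {
        "TimePeriod": {"Start": start, "End": end},
        "Granularity": "MONTHLY",
        "Metrics": ["UnblendedCost"],
        # Group first by member account, then by the tag's value.
        "GroupBy": [
            {"Type": "DIMENSION", "Key": "LINKED_ACCOUNT"},
            {"Type": "TAG", "Key": tag_key},
        ],
    }


def summarize_costs(response):
    """Total UnblendedCost per (account_id, tag_value) across all periods."""
    totals = defaultdict(Decimal)
    for period in response.get("ResultsByTime", []):
        for group in period.get("Groups", []):
            account_id, tag = group["Keys"]
            # Tag groups come back as "<key>$<value>"; an empty value
            # means the resource carried no such tag.
            _, _, tag_value = tag.partition("$")
            amount = Decimal(group["Metrics"]["UnblendedCost"]["Amount"])
            totals[(account_id, tag_value or "untagged")] += amount
    return dict(totals)


# Illustrative response in the shape Cost Explorer returns (made-up numbers).
sample_response = {
    "ResultsByTime": [
        {
            "TimePeriod": {"Start": "2024-10-01", "End": "2024-11-01"},
            "Groups": [
                {"Keys": ["111111111111", "project$churn-model"],
                 "Metrics": {"UnblendedCost": {"Amount": "120.50", "Unit": "USD"}}},
                {"Keys": ["111111111111", "project$"],
                 "Metrics": {"UnblendedCost": {"Amount": "30.25", "Unit": "USD"}}},
                {"Keys": ["222222222222", "project$churn-model"],
                 "Metrics": {"UnblendedCost": {"Amount": "75.00", "Unit": "USD"}}},
            ],
        }
    ]
}

query = build_cost_query("2024-10-01", "2024-11-01", "project")
costs = summarize_costs(sample_response)
for (account_id, tag_value), amount in sorted(costs.items()):
    print(f"{account_id}  {tag_value:<12}  {amount} USD")
```

With credentials for the management (payer) account, the same request could be sent as `boto3.client("ce").get_cost_and_usage(**query)`. The tag only appears in the results once it has been activated as a cost allocation tag, and the untagged bucket is a quick way to see how much spend is still missing tags.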
