GraphStorm 0.3: Scalable, multi-task learning on graphs with user-friendly APIs

AWS Machine Learning

AUGUST 2, 2024

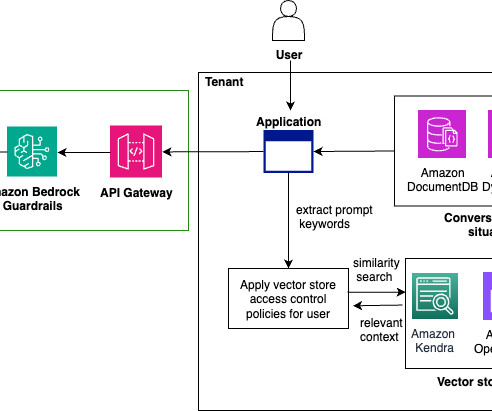

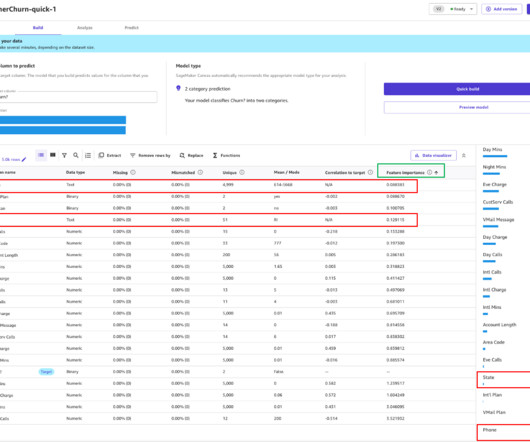

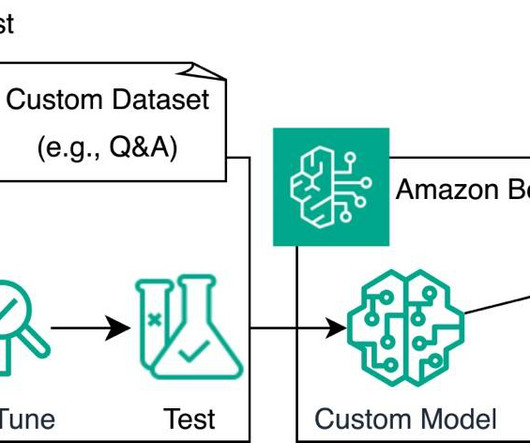

With GraphStorm, you can build solutions that directly take into account the structure of relationships or interactions between billions of entities, which are inherently embedded in most real-world data, including fraud detection scenarios, recommendations, community detection, and search/retrieval problems. Specifically, GraphStorm 0.3

Let's personalize your content