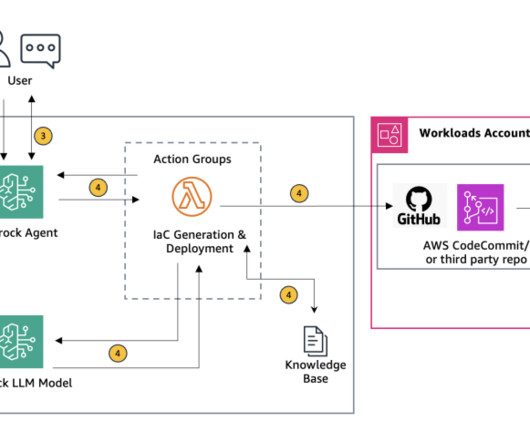

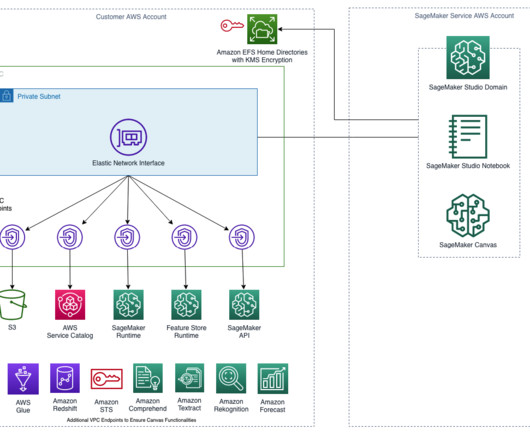

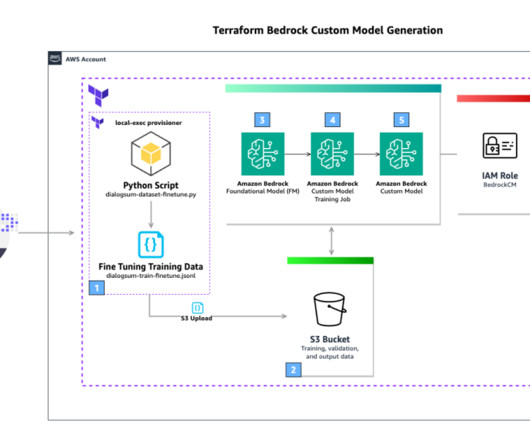

Generate customized, compliant application IaC scripts for AWS Landing Zone using Amazon Bedrock

AWS Machine Learning

APRIL 18, 2024

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon with a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

Let's personalize your content