Govern generative AI in the enterprise with Amazon SageMaker Canvas

AWS Machine Learning

SEPTEMBER 23, 2024

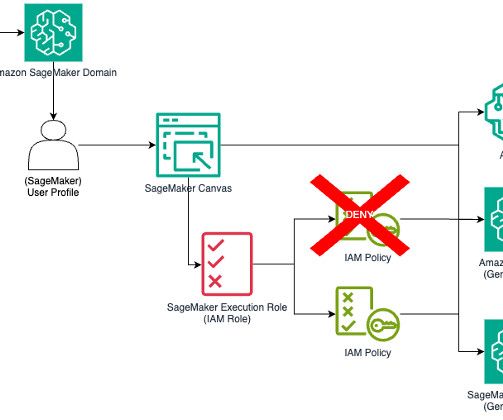

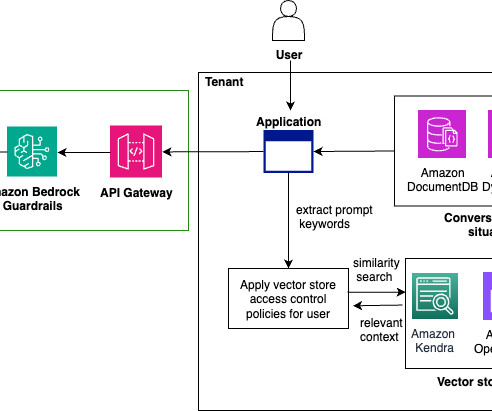

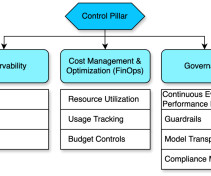

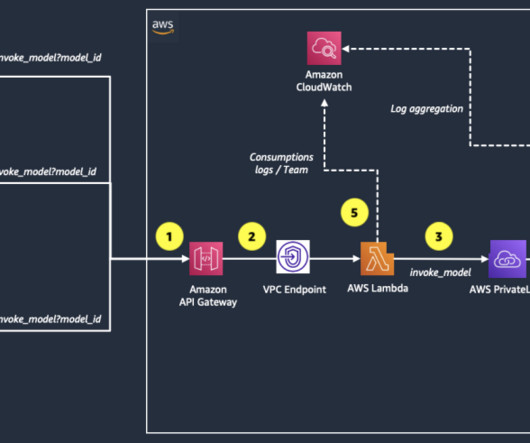

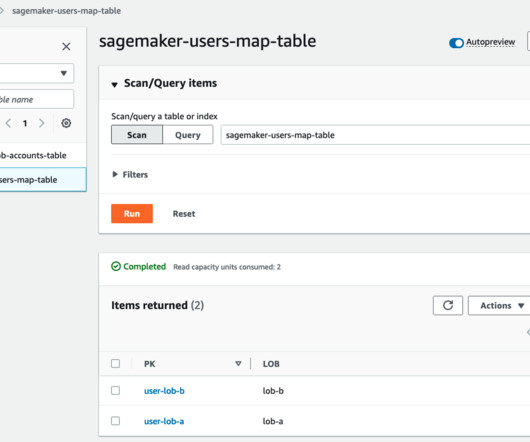

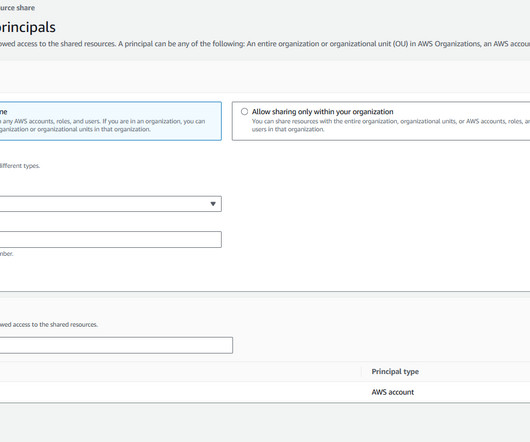

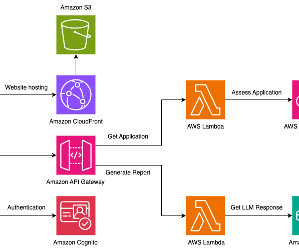

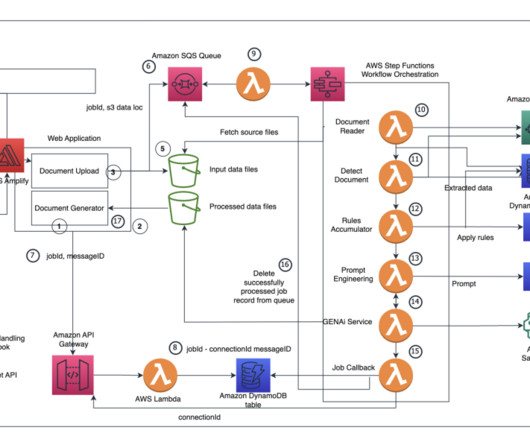

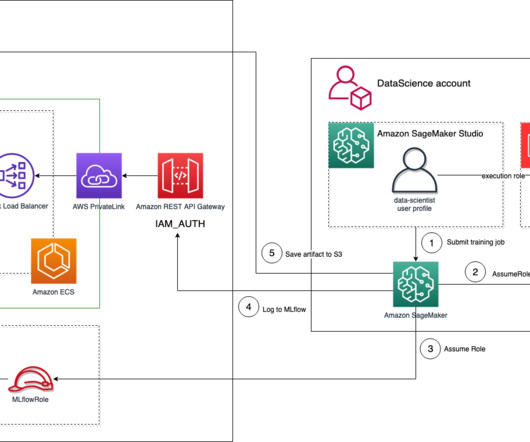

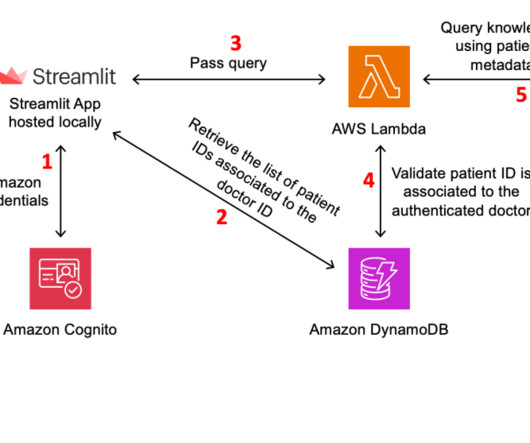

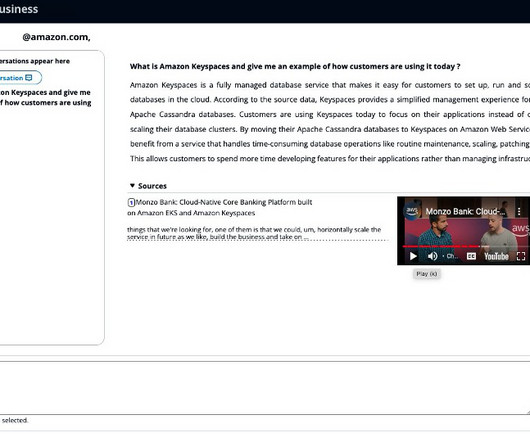

Controlling which users can access which generative AI models is crucial for compliance, security, and governance. In this post, we analyze strategies for governing access to Amazon Bedrock and SageMaker JumpStart models from within SageMaker Canvas using AWS Identity and Access Management (IAM) policies. We provide code examples tailored to common enterprise governance scenarios.
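As a rough illustration of the kind of IAM policy such a strategy might rely on, the sketch below builds a policy document that denies invocation of Amazon Bedrock foundation models. It is not taken from the post itself: the helper name `build_deny_bedrock_policy` and the `Sid` value are hypothetical, and it assumes the policy would be attached to the execution role that SageMaker Canvas users operate under.

```python
import json


def build_deny_bedrock_policy(model_ids=None):
    """Return an IAM policy document (as a dict) that denies Bedrock model invocation.

    model_ids: optional list of Bedrock foundation model IDs to block.
    When omitted, the policy blocks every foundation model.
    (Hypothetical helper for illustration only.)
    """
    if model_ids:
        # One ARN per blocked model; region is wildcarded, account field is empty
        # because foundation models are AWS-owned resources.
        resources = [
            f"arn:aws:bedrock:*::foundation-model/{model_id}"
            for model_id in model_ids
        ]
    else:
        resources = ["arn:aws:bedrock:*::foundation-model/*"]

    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyBedrockInvocation",  # assumed label, any valid Sid works
                "Effect": "Deny",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": resources,
            }
        ],
    }


policy = build_deny_bedrock_policy()
print(json.dumps(policy, indent=2))
```

Passing a list of model IDs narrows the deny to those models only, which may suit cases where some models are approved and others are not. Because an explicit `Deny` overrides any `Allow` in IAM policy evaluation, a statement like this would take effect even if a broader managed policy grants Bedrock access.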
