Generate training data and cost-effectively train categorical models with Amazon Bedrock

AWS Machine Learning

MARCH 27, 2025

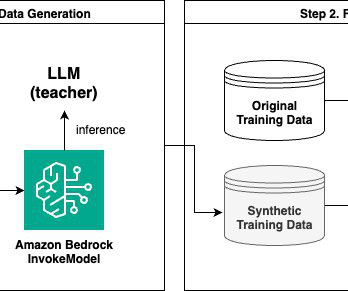

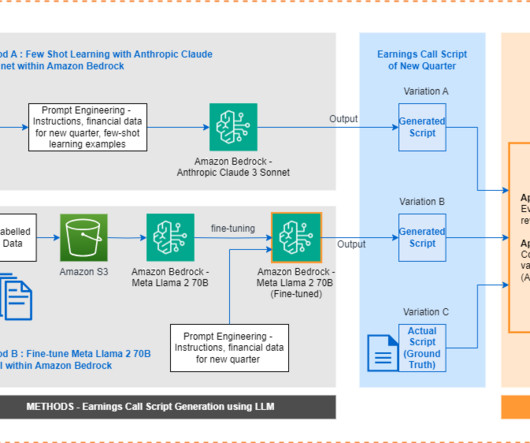

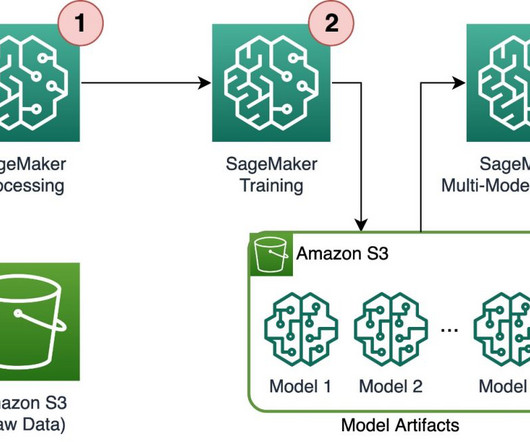

In this post, we explore how you can use Amazon Bedrock to generate high-quality categorical ground truth data, which is crucial for training machine learning (ML) models in a cost-sensitive environment. This results in an imbalanced class distribution for training and test datasets.

Let's personalize your content