Benchmarking Amazon Nova and GPT-4o models with FloTorch

AWS Machine Learning

MARCH 11, 2025

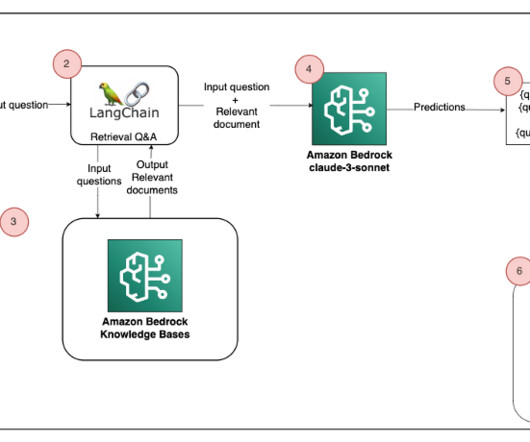

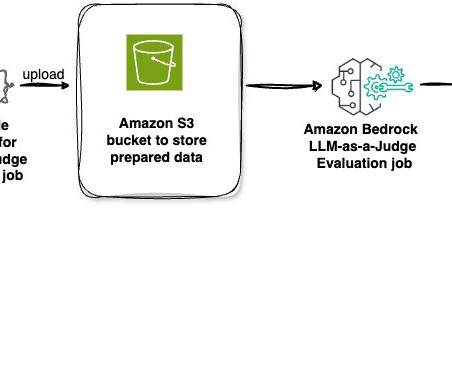

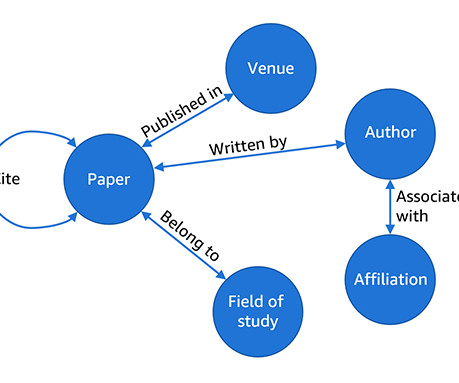

These models offer enterprises a range of capabilities, balancing accuracy, speed, and cost-efficiency. Using its enterprise software, FloTorch conducted an extensive comparison between Amazon Nova models and OpenAIs GPT-4o models with the Comprehensive Retrieval Augmented Generation (CRAG) benchmark dataset.

Let's personalize your content