Integrate HyperPod clusters with Active Directory for seamless multi-user login

AWS Machine Learning

APRIL 22, 2024

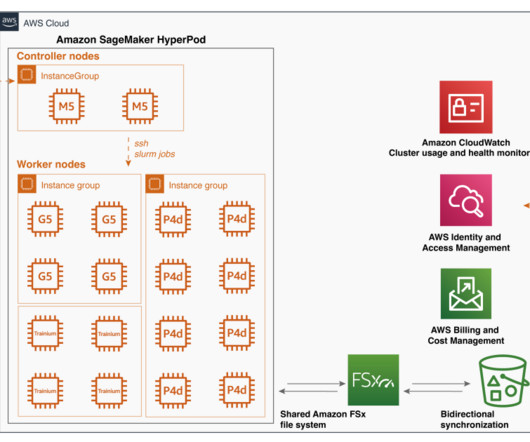

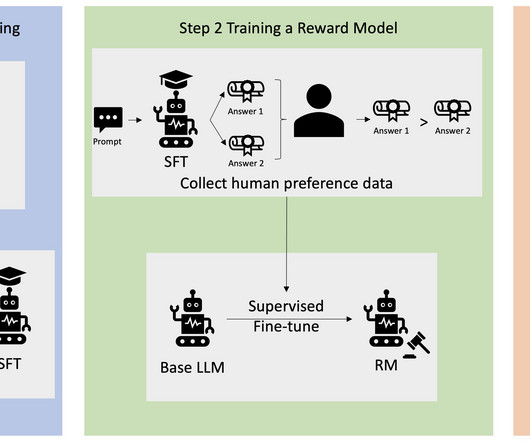

To achieve this multi-user environment, you can take advantage of Linux’s user and group mechanism and statically create multiple users on each instance through lifecycle scripts. For more details on how to create HyperPod clusters, refer to Getting started with SageMaker HyperPod and the HyperPod workshop.
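The static approach can be sketched in Python. This is a minimal illustration, not the post's own lifecycle script. It assumes a hypothetical shared group `mlusers` (GID 2000) and two hypothetical users, `alice` and `bob`. The helper names `build_commands` and `apply_commands` are also hypothetical and are not part of any HyperPod API. The code builds standard `groupadd` and `useradd` invocations that a lifecycle script could run as root on every instance. Pinning explicit UIDs and GIDs means the same script yields identical numeric IDs on each node, which keeps file ownership consistent if the instances share a filesystem.

```python
import shlex
import subprocess
import sys
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterUser:
    """One login account to create on every cluster instance."""
    name: str
    uid: int


# Hypothetical example values; replace with your own group and user list.
SHARED_GROUP = "mlusers"
SHARED_GID = 2000
USERS = [ClusterUser("alice", 2001), ClusterUser("bob", 2002)]


def build_commands(users, group=SHARED_GROUP, gid=SHARED_GID):
    """Return argv lists that create one shared group and one account per user.

    Explicit UIDs/GIDs make every instance assign the same numeric IDs,
    so files on a shared filesystem keep the same owner across nodes.
    """
    commands = [["groupadd", "--gid", str(gid), group]]
    for user in users:
        commands.append([
            "useradd",
            "--uid", str(user.uid),
            "--gid", str(gid),
            "--create-home",
            "--shell", "/bin/bash",
            user.name,
        ])
    return commands


def apply_commands(commands):
    """Execute each command in order; needs root, as a lifecycle script has."""
    for argv in commands:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    cmds = build_commands(USERS)
    if "--apply" in sys.argv[1:]:
        apply_commands(cmds)
    else:
        # Dry run: print the commands instead of executing them.
        for argv in cmds:
            print(shlex.join(argv))
```

Without `--apply`, the script prints `groupadd --gid 2000 mlusers` followed by one `useradd` line per user. As written, the script is not idempotent: `groupadd` and `useradd` exit with an error if the group or account already exists, so a real lifecycle script would need to check for existing entries before running them.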
