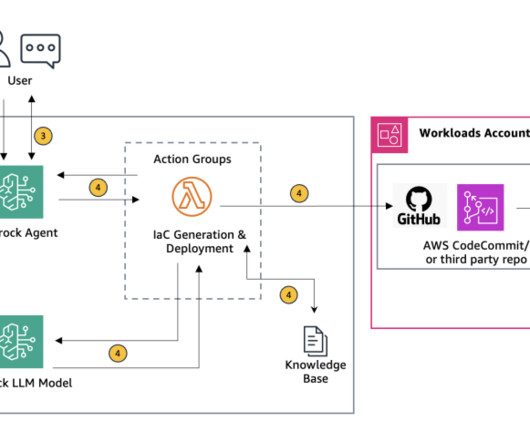

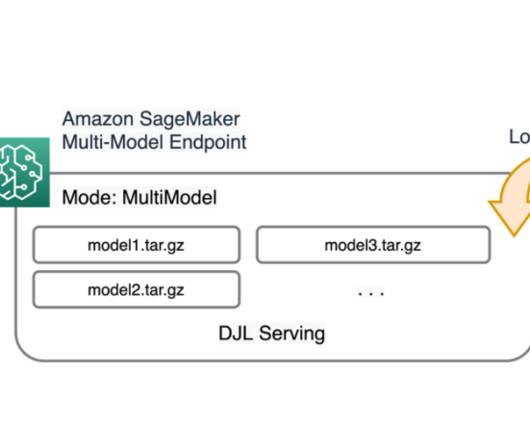

Generate customized, compliant application IaC scripts for AWS Landing Zone using Amazon Bedrock

AWS Machine Learning

APRIL 18, 2024

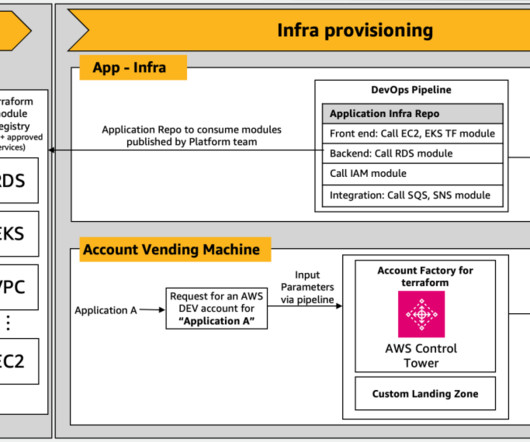

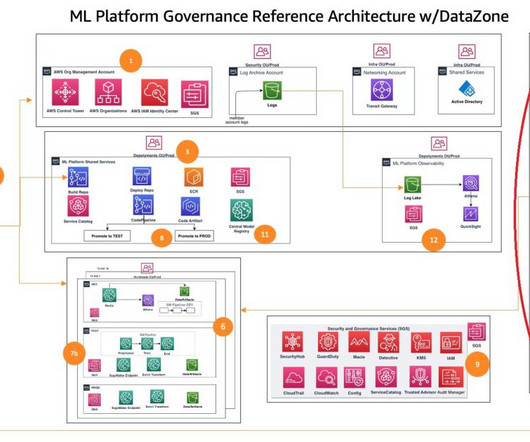

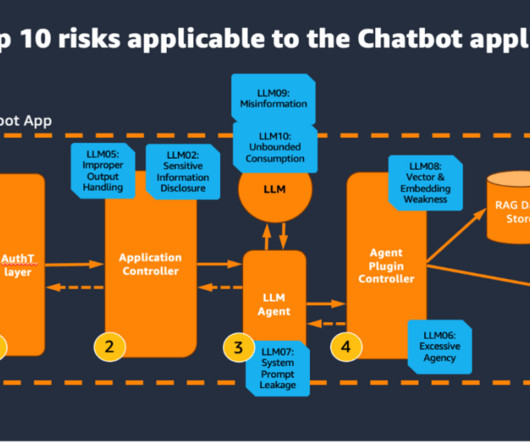

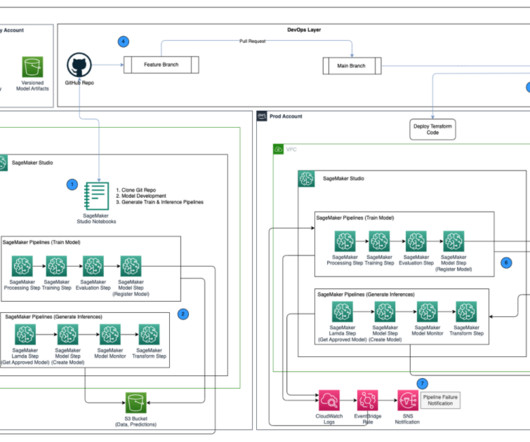

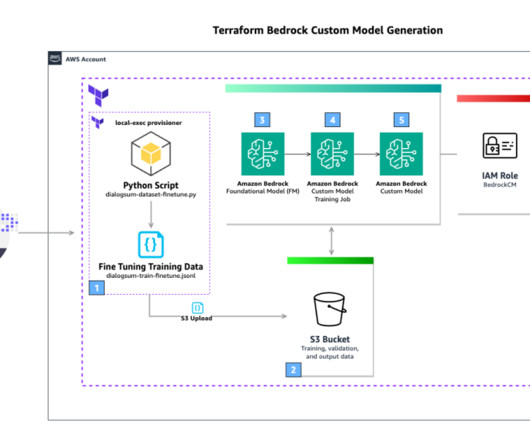

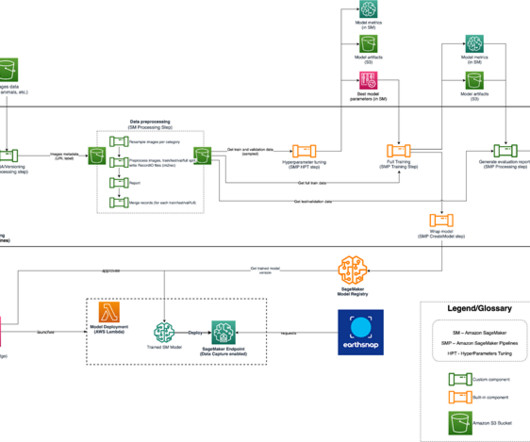

Amazon Bedrock lets teams generate Terraform and AWS CloudFormation scripts tailored to their organization's requirements, with compliance and security best practices built in. This helps ensure that your cloud foundation follows AWS best practices from the start.
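As a minimal sketch of this workflow, the Python below folds a set of organizational compliance rules into a prompt and sends it to a model on Amazon Bedrock. It assumes the boto3 `bedrock-runtime` client and its Converse API. The model ID, region, and guardrail list are illustrative assumptions rather than values from the article, and `build_iac_prompt` and `generate_iac` are hypothetical helper names.

```python
"""Sketch: ask Amazon Bedrock to draft IaC that follows organizational rules.

Model ID, region, and the guardrail list are illustrative assumptions.
"""
from typing import Sequence

# Hypothetical organizational requirements a landing zone might enforce.
DEFAULT_GUARDRAILS = (
    "Encrypt all S3 buckets with SSE-KMS.",
    "Block all public access on S3 buckets.",
    "Tag every resource with CostCenter and Owner.",
)


def build_iac_prompt(app_description: str,
                     iac_format: str = "CloudFormation",
                     guardrails: Sequence[str] = DEFAULT_GUARDRAILS) -> str:
    """Combine the application request and the compliance rules into one prompt."""
    if iac_format not in ("CloudFormation", "Terraform"):
        raise ValueError("iac_format must be 'CloudFormation' or 'Terraform'")
    rules = "\n".join(f"- {rule}" for rule in guardrails)
    return (
        f"Generate {iac_format} code for the following application:\n"
        f"{app_description}\n\n"
        f"The code MUST satisfy these organizational requirements:\n{rules}\n"
        "Return only the code, with no extra commentary."
    )


def generate_iac(prompt: str,
                 model_id: str = "anthropic.claude-3-sonnet-20240229-v1:0",
                 region: str = "us-east-1") -> str:
    """Send the prompt through the Bedrock Converse API and return the reply text."""
    import boto3  # imported lazily so build_iac_prompt works without the AWS SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": 4096, "temperature": 0.0},
    )
    return response["output"]["message"]["content"][0]["text"]


if __name__ == "__main__":
    prompt = build_iac_prompt("A private S3 bucket for application logs.")
    print(prompt)
    # Requires AWS credentials and access to the chosen model:
    # print(generate_iac(prompt))
```

Keeping prompt construction separate from the API call lets you review or unit-test the compliance rules without AWS credentials. Any generated template should still be validated (for example with `cfn-lint` or `terraform validate`) before it is deployed.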

Let's personalize your content