Governing the ML lifecycle at scale, Part 3: Setting up data governance at scale

AWS Machine Learning

NOVEMBER 22, 2024

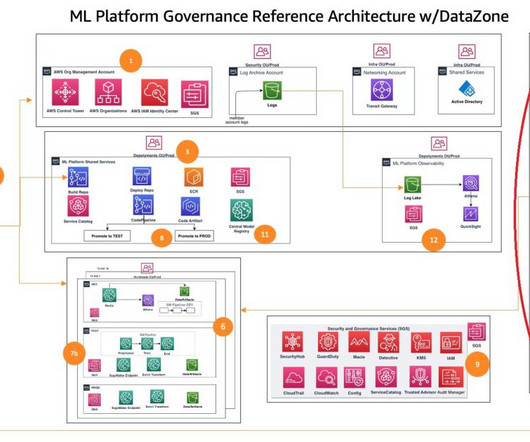

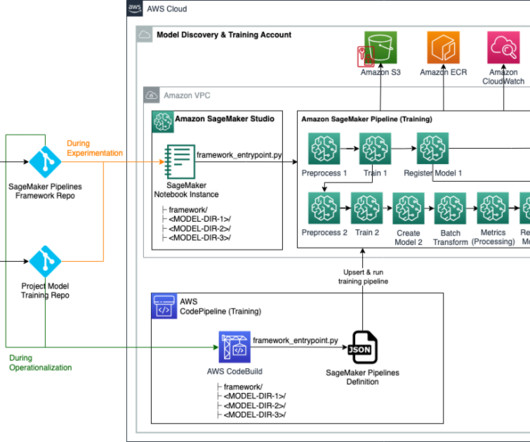

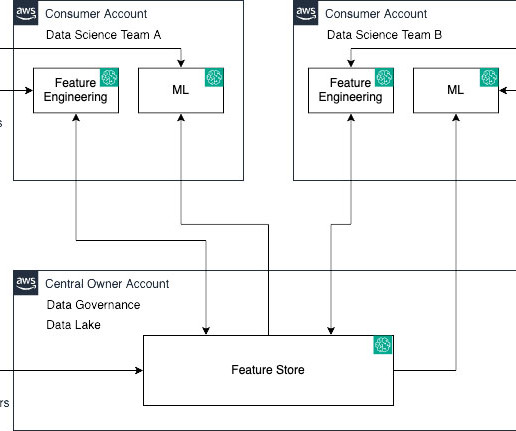

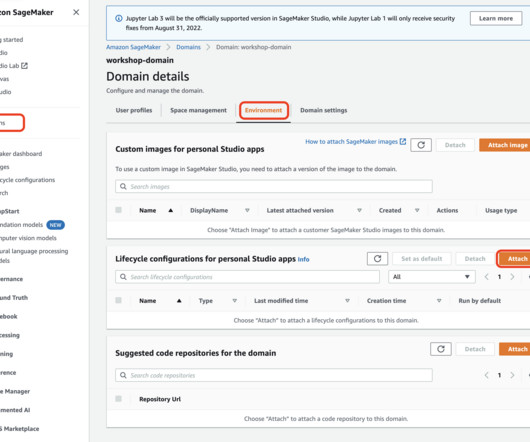

This post is part of an ongoing series about governing the machine learning (ML) lifecycle at scale. This post dives deep into how to set up data governance at scale using Amazon DataZone for the data mesh. However, as data volumes and complexity continue to grow, effective data governance becomes a critical challenge.

Let's personalize your content