Amazon Bedrock Flows is now generally available with enhanced safety and traceability

AWS Machine Learning

NOVEMBER 22, 2024

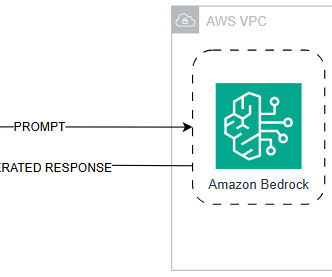

Today, we are excited to announce the general availability of Amazon Bedrock Flows (previously known as Prompt Flows). With Bedrock Flows, you can quickly build and execute complex generative AI workflows without writing code. Key benefits include:

- Simplified generative AI workflow development with an intuitive visual interface.
- Seamless integration of the latest foundation models (FMs), Prompts, Agents, Knowledge Bases, Guardrails, and other AWS services.
