Reducing hallucinations in LLM agents with a verified semantic cache using Amazon Bedrock Knowledge Bases

AWS Machine Learning

FEBRUARY 21, 2025

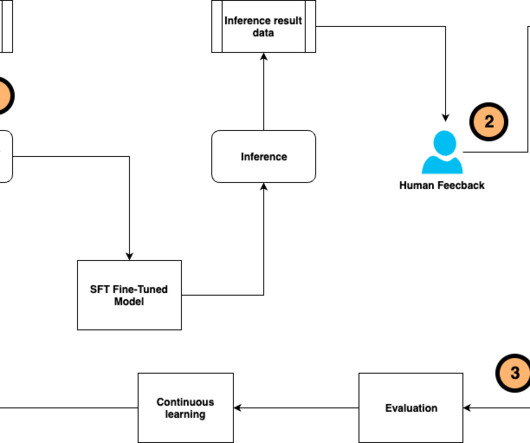

Large language models (LLMs) excel at generating human-like text but face a critical challenge: hallucinationproducing responses that sound convincing but are factually incorrect. While these models are trained on vast amounts of generic data, they often lack the organization-specific context and up-to-date information needed for accurate responses in business settings.

Let's personalize your content